Many of us have spent our entire careers applying technology to improve business performance. Those investments drove either greater business efficiency or revenue growth using traditional computer systems that took data in, processed it, and produced some form of output. The inputs and outputs followed a predictable, well-defined format, and the processing used IF-this-THEN-that logic, which we refer to as deterministic behaviour. These systems were predictable and could be tested by preparing test data sets and checking that the outputs matched the expected results. That rigidity came at a price: setting up these systems and integrating them into the business was expensive, and users often found that the systems didn’t capture all the nuances of the process, so shadow IT prevailed.

This year in retail we have entered the era of AI Agents – autonomous systems capable of planning, executing complex workflows, and making decisions without direct supervision. The theoretical promise of AI agents is seductive: a frictionless retail machine where inventory manages itself, pricing adjusts instantly to demand, and customer service issues are resolved in milliseconds.

But there is a dangerous gap between what an AI agent technically can do and what it should do.

Current AI models excel at pattern recognition at scale, but they notoriously fail at context, empathy, ethical judgment, and handling “black swan” events. Fully automating processes that depend on those qualities would be reckless.

For many critical retail functions, the most effective approach is not full autonomy, but a Human-in-the-Loop (HITL) system. In these workflows, AI handles the heavy lifting of data processing, but a human serves as the final “circuit breaker” for high-stakes decisions.
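
The “circuit breaker” idea can be sketched as a small routing rule. This is a minimal illustration rather than a reference design: the `ProposedAction` fields, the `route` function, and both thresholds are hypothetical names and values chosen for the example, and a real retailer would tune them per process.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_EXECUTE = "auto_execute"
    HUMAN_REVIEW = "human_review"


@dataclass
class ProposedAction:
    description: str
    financial_impact: float  # estimated value at stake
    customer_facing: bool    # does the action touch a customer directly?
    confidence: float        # agent's self-reported confidence, 0.0 to 1.0


# Hypothetical thresholds, assumed purely for illustration.
MAX_AUTO_IMPACT = 500.0
MIN_AUTO_CONFIDENCE = 0.9


def route(action: ProposedAction) -> Route:
    """Send high-stakes or uncertain customer-facing actions to a human."""
    if action.financial_impact > MAX_AUTO_IMPACT:
        return Route.HUMAN_REVIEW
    if action.customer_facing and action.confidence < MIN_AUTO_CONFIDENCE:
        return Route.HUMAN_REVIEW
    return Route.AUTO_EXECUTE
```

The point of the design is that the agent never decides for itself whether a decision is high-stakes; that boundary is set by people and enforced outside the model.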

Below are specific examples across the retail landscape where removing the human element creates unacceptable risks.

Customer Experience

While AI can process data faster than any human, it possesses no emotional intelligence. It cannot read the room, detect sarcasm, or offer genuine compassion. When an AI agent rigidly applies policy to a sensitive human situation, the damage to loyalty could be permanent.

Here are examples of processes that risk offending your most valuable customers or mishandling sensitive issues.

| Retail Process | Can it be technically automated? | Why it requires a Human-in-the-Loop |
| --- | --- | --- |
| Styling Advice | Yes. Agents can recommend outfits based on past purchase history and trend forecasting data. | A customer might request clothes for an event while dealing with body image issues or sudden weight changes. An AI might suggest a “trending” fit that triggers the customer’s insecurity based on cold data. A human stylist reads emotional subtext to build confidence. |
| Social Media Complaint Response | Yes. Agents can auto-reply to negative tweets/comments with apologies and support links. | If a customer complains about a serious safety issue (e.g., “Your product caught fire”), an automated “We’re sorry to hear that! DM us for a coupon!” sounds dismissive and invites litigation. A human needs to identify and escalate safety threats immediately. |
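
The complaint-response case lends itself to a simple pre-filter that runs before any auto-reply is sent. The sketch below assumes a hypothetical keyword list and a hypothetical `triage_complaint` function. A keyword screen like this will miss many real safety reports, so it illustrates where the escalation hook sits and is not a sufficient safeguard on its own.

```python
import re

# Hypothetical, deliberately short keyword list for illustration only.
SAFETY_PATTERNS = [
    r"\bfire\b",
    r"\bburn(ed|t|s|ing)?\b",
    r"\binjur(y|ed|ies)\b",
    r"\bshock(ed)?\b",
    r"\bchok(e|ed|ing)\b",
    r"\ballergic\b",
    r"\bhospital\b",
]
SAFETY_RE = re.compile("|".join(SAFETY_PATTERNS), re.IGNORECASE)


def triage_complaint(message: str) -> str:
    """Return the next step for a complaint: escalate or draft an auto-reply."""
    if SAFETY_RE.search(message):
        # Possible safety issue: no coupon, no canned apology, a person takes over.
        return "escalate_to_human"
    return "draft_auto_reply"
```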

Buying & Merchandising

Supply chain AI is great at predicting the predictable based on historical data. However, the real world is increasingly defined by the unpredictable – weather disasters, geopolitical shifts, or overnight viral trends. An autonomous agent acting solely on backward-looking data can lead to disastrous financial waste or empty shelves during critical moments.

| Retail Process | Can it be technically automated? | Why it requires a Human-in-the-Loop |
| --- | --- | --- |
| Seasonal Inventory Purchasing | Yes. Agents can forecast demand based on last year’s sales and order stock automatically. | AI cannot predict a sudden supply chain blockage (like a canal obstruction) or a TikTok trend that changes demand overnight. Without human oversight, the AI might order 50k units of a fad that died yesterday, or fail to secure stock during a surprise shortage. |
| Supplier Negotiation | Yes. Agents can negotiate price and delivery terms with vendors via email/chat using defined parameters. | Retail relies on long-term vendor partnerships. An AI agent might aggressively squeeze a supplier for a 1% discount based on its goal function, causing the supplier to walk away entirely. A human buyer knows when to accept a slightly higher price today to maintain a critical relationship for tomorrow. |
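
For seasonal purchasing, one way to keep a buyer in the loop is a guardrail that lets routine orders through and holds unusual ones for approval. The `PurchaseOrder` type, the `needs_buyer_approval` function, and both limits below are assumptions made for this sketch, not figures from any real system.

```python
from dataclasses import dataclass


@dataclass
class PurchaseOrder:
    sku: str
    quantity: int              # units the agent proposes to order
    last_year_units_sold: int  # same season, previous year


# Hypothetical guardrails, assumed for illustration.
MAX_AUTO_QUANTITY = 5_000  # largest order the agent may place unaided
MAX_DEVIATION = 0.25       # tolerated relative change versus last year


def needs_buyer_approval(order: PurchaseOrder) -> bool:
    """Hold large orders, or orders far from last year's sales, for a human buyer."""
    if order.quantity > MAX_AUTO_QUANTITY:
        return True
    if order.last_year_units_sold == 0:
        return True  # no sales history to compare against
    deviation = (
        abs(order.quantity - order.last_year_units_sold)
        / order.last_year_units_sold
    )
    return deviation > MAX_DEVIATION
```

A rule like this does not predict the canal blockage or the viral trend. It only ensures that a 50k-unit order lands on a buyer’s desk before it is placed.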

Pricing and Promotions

Dynamic pricing is a powerful tool, but it requires an ethical compass that AI lacks. Algorithms are designed to maximise profit based on supply and demand curves. They do not understand concepts like “price gouging” during an emergency or the long-term damage of training customers to game your system.

| Retail Process | Can it be technically automated? | Why it requires a Human-in-the-Loop |
| --- | --- | --- |
| Dynamic Pricing (Surge Pricing) | Yes. Agents can adjust shelf/online prices in real-time based on demand and competitor pricing. | If a severe weather event hits, demand for critical supplies will spike. An AI agent will logically raise prices to maximise profit, leading to accusations of price gouging and public backlash. A human should intervene to freeze prices during emergencies. |
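
A price guardrail of this kind can sit between the pricing agent and the shelf. In the sketch below, `guarded_price`, the 20% surge cap, and the `emergency_freeze` flag are all hypothetical. The assumption is that a person, not the agent, sets the flag when an emergency is declared.

```python
from decimal import Decimal

# Hypothetical cap: the agent may never price more than 20% above baseline.
MAX_SURGE = Decimal("1.20")


def guarded_price(baseline: Decimal, proposed: Decimal, emergency_freeze: bool) -> Decimal:
    """Clamp the agent's proposed price before it is published."""
    if emergency_freeze:
        # A human has declared an emergency: block every increase over baseline.
        return min(proposed, baseline)
    ceiling = (baseline * MAX_SURGE).quantize(Decimal("0.01"))
    return min(proposed, ceiling)
```

For example, with a baseline of 10.00 and a proposed price of 15.00, the function returns 12.00 in normal trading and 10.00 while the freeze is active.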

Loss Prevention and Brand Liability

Perhaps the riskiest area for full automation is security. Using computer vision to identify theft involves making accusations against individuals. Given the known history of bias in AI recognition systems, allowing an agent to autonomously flag or detain a customer is a direct path to discrimination lawsuits and viral reputational damage.

| Retail Process | Can it be technically automated? | Why it requires a Human-in-the-Loop |
| --- | --- | --- |
| Theft Detection (Computer Vision) | Yes. Agents can analyse video feeds to flag “suspicious movements” and alert security or auto-lock doors. | Profiling & False Positives: AI often misinterprets innocent behaviours (e.g., a customer putting their own phone in their pocket, or the unique movements of a disabled customer) as theft. Automatically confronting a customer based on AI error invites severe legal consequences. Humans must review the footage before any action is taken. |

Conclusion: The “Burger” Human-in-the-Loop Solution

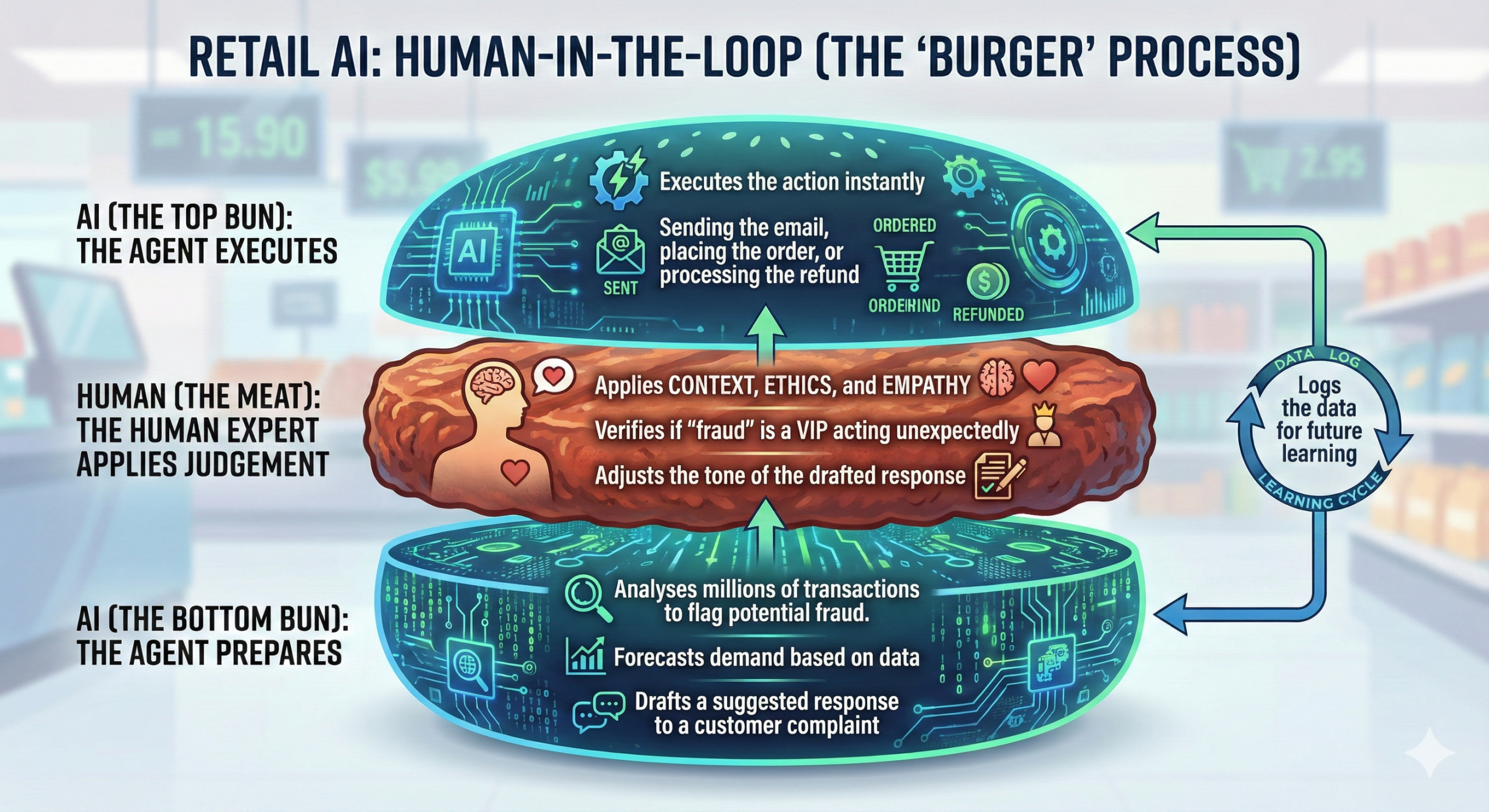

The trick is to deploy AI agents to handle volume and data, and to reserve final judgment on critical decisions for people. The most successful implementation strategy will be what I call the “AI Burger” model:

- AI (The Bottom Bun): The agent does the preparation work. It analyses millions of transactions to flag potential fraud, forecasts demand based on data, or drafts a suggested response to a customer complaint.

- Human (The Meat): The human expert applies context, ethics, and empathy. They verify if the “fraud” is actually a VIP customer acting unexpectedly, or they adjust the tone of the drafted response.

- AI (The Top Bun): Once the human approves, the agent executes the action instantly (sending the email, placing the order, or processing the refund) and logs the data for future learning.
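
The three layers above can be sketched as a small pipeline. Everything named here (`Draft`, `Decision`, `BurgerPipeline`, `draft_reply`, `send`) is a hypothetical stand-in, and the prepare and execute steps are stubs where a real agent and a real mail or order system would plug in.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Draft:
    action: str   # e.g. "send_email", "place_order", "process_refund"
    payload: str  # the content the agent proposes


@dataclass
class Decision:
    approved: bool
    payload: Optional[str] = None  # reviewer's edited text, or None to keep the draft
    reviewer: str = "unknown"


@dataclass
class BurgerPipeline:
    prepare: Callable[[str], Draft]      # bottom bun: the agent drafts
    review: Callable[[Draft], Decision]  # meat: the human decides
    execute: Callable[[Draft], None]     # top bun: the agent acts
    audit_log: List[dict] = field(default_factory=list)

    def run(self, case: str) -> bool:
        draft = self.prepare(case)
        decision = self.review(draft)
        if decision.approved:
            if decision.payload is not None:
                draft = Draft(draft.action, decision.payload)
            self.execute(draft)  # nothing is executed without approval
        # Log approvals and rejections alike for future learning.
        self.audit_log.append({
            "case": case,
            "action": draft.action,
            "approved": decision.approved,
            "reviewer": decision.reviewer,
        })
        return decision.approved


def draft_reply(case: str) -> Draft:
    """Stub for the agent's drafting step."""
    return Draft(action="send_email", payload=f"We are sorry about: {case}")


sent: List[str] = []


def send(draft: Draft) -> None:
    """Stub for the execution step; a real system would send the email here."""
    sent.append(draft.payload)
```

In use, `review` would be whatever surfaces the draft to a person, such as a ticket queue or an approval screen. The pipeline itself only guarantees that execution waits for that decision.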

By keeping humans in the loop on critical processes, retailers can harness the speed of automation without sacrificing the trust of their customers.