When Amazon first introduced the Echo in 2014, it was revolutionary. Yet the investment over the following years to roll it out into homes everywhere, whilst making little or no money from the Echo product line, looked like a pointless business strategy. What did Amazon actually stand to gain? It looks even bleaker now, as the AI tools most of us use in our everyday lives are not made by Amazon.

But we know that Amazon is a very smart company. Google are closing the gap on OpenAI, which stole a march on everyone with the launch of ChatGPT, built on GPT-3.5, in late 2022. So what have Amazon been doing? Are they just watching and waiting, playing the Apple game of not going to market first but going to market with something better?

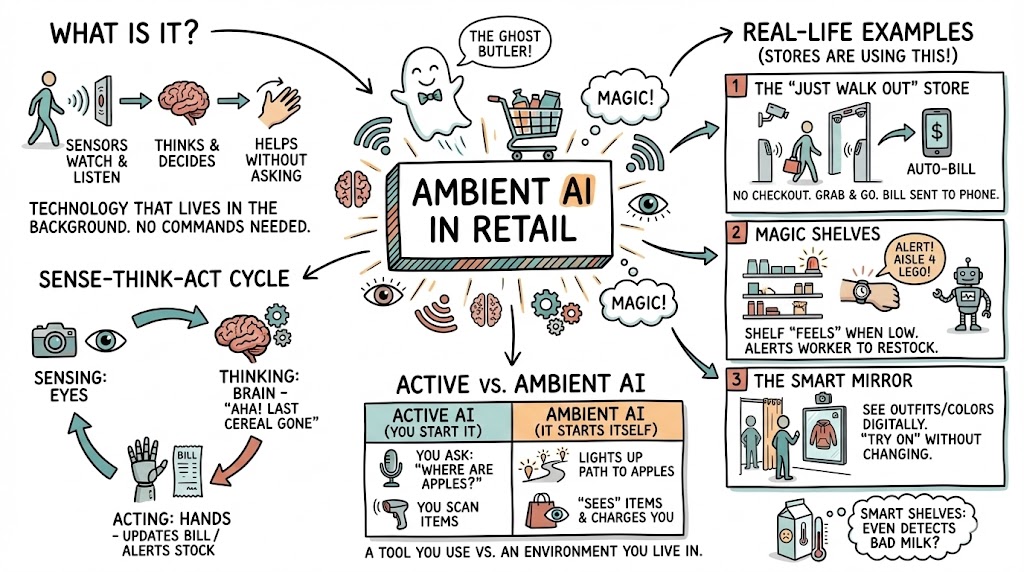

In 2014 Amazon wasn’t just launching a smart speaker; it was unveiling a vision of a future where technology seamlessly integrated into daily life, primarily through voice. At the heart of this ambition lay a powerful, seemingly inevitable goal: to make shopping as simple as speaking a request aloud. Amazon envisioned the Echo, powered by its Alexa AI, as the ultimate hands-free shopping assistant, capable of understanding needs, finding products, and completing purchases without a single click or tap.

The early promise of voice shopping on the Echo was compelling. Imagine running low on batteries – a simple “Alexa, order AA batteries” could have a fresh pack on its way. Forgot to add milk to the grocery list? “Alexa, add milk to the shopping list” would handle it instantly. For routine reorders of frequently purchased items, voice seemed like a natural and efficient interface. Amazon heavily promoted these use cases, betting that the convenience of voice would drive significant e-commerce transactions directly through their devices, further cementing their dominance in retail and placing them squarely in the centre of the smart home.

However, while the Echo found a place in millions of homes, the grand vision of pervasive voice shopping didn’t materialise with the speed or scale initially anticipated. Early voice shopping experiences, while functional for simple tasks, often felt clunky and limited. Discovering new products, comparing options based on nuanced criteria, or handling complex orders proved challenging for Alexa’s initial capabilities. The conversational flow wasn’t always natural, and users often reverted to the familiar visual interface of the Amazon app or website for anything beyond the most straightforward purchases.

Enter OpenAI. With the public emergence of highly advanced large language models (LLMs) and conversational AI like ChatGPT, the world witnessed a leap forward in natural language understanding and generation. These models demonstrated an unprecedented ability to engage in fluid, context-aware conversations, understand complex queries, and provide detailed, human-like responses. This stood in contrast to the more command-and-control nature of earlier voice assistants like Alexa, which, while excellent at executing specific pre-programmed tasks and answering factual questions, struggled with open-ended dialogue and subtle comprehension.

OpenAI’s progress effectively “stole a march” on companies like Amazon in the realm of truly natural, intelligent conversational AI. While Amazon had a massive lead in smart home device penetration and e-commerce integration, the core AI powering the interaction suddenly felt less sophisticated than the new standard set by generative AI. This exposed the limitations that had been holding back the original voice shopping dream: shopping is inherently conversational, often involving browsing, comparison, questions, and decision-making that require a deeper level of understanding and interaction than simple commands allow.

Recognising this shift and the potential for advanced AI to finally unlock the full potential of voice commerce, Amazon is far from standing still. They are actively and aggressively working to bridge the gap and revitalise their Echo and Alexa platforms with next-generation AI capabilities.

Several key areas indicate Amazon’s current strategy to realise the dream of seamless voice shopping:

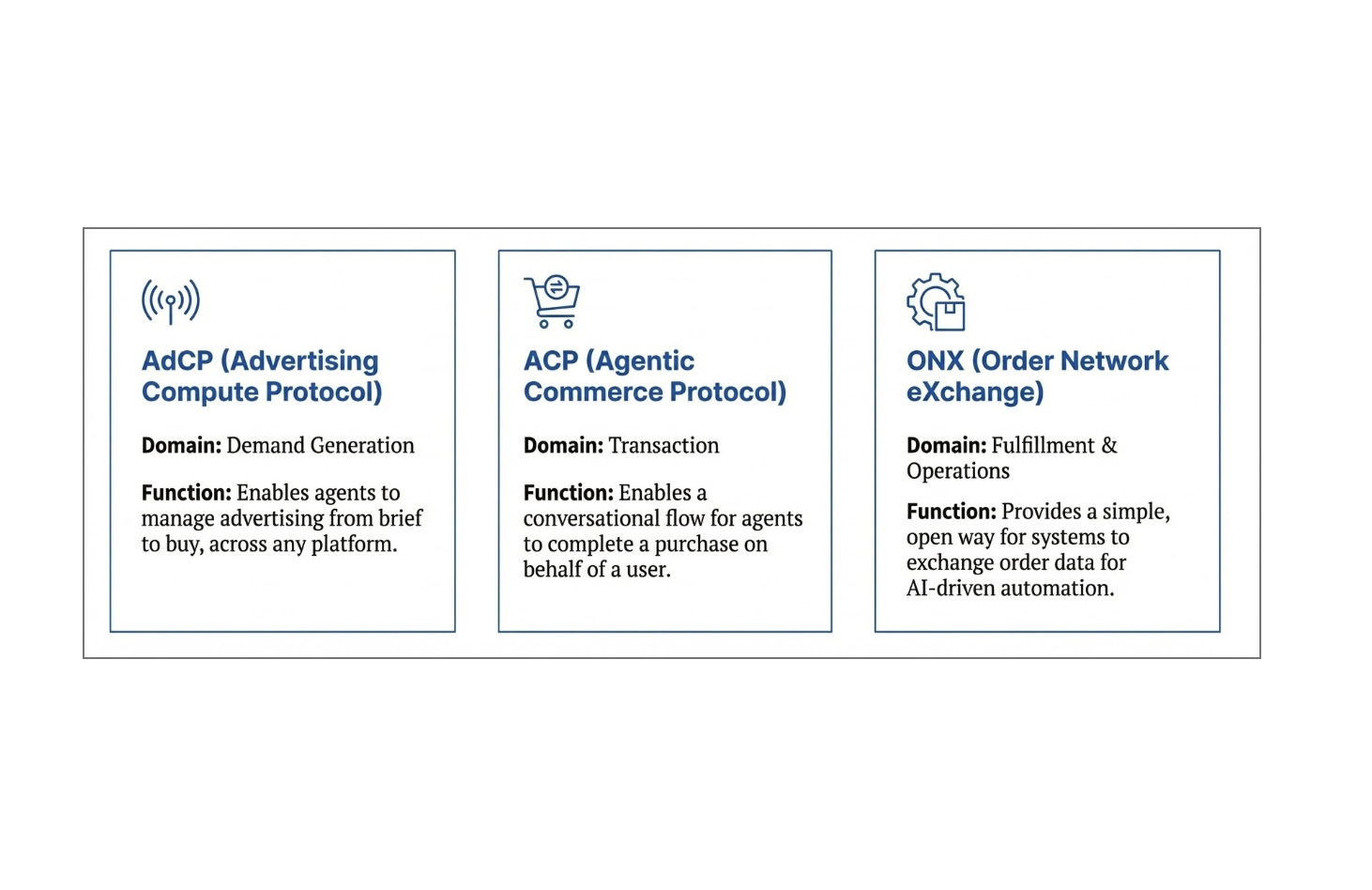

- Integration of Advanced AI Models: Amazon is heavily investing in more powerful AI and integrating it into Alexa, likely including its own proprietary LLMs such as the ‘Nova’ family of models. This aims to move Alexa beyond simple command execution towards the more natural, multi-turn conversations essential for complex shopping scenarios. The goal is for Alexa to better understand context, remember earlier parts of a conversation, and handle more ambiguous or detailed requests.

- Enhancing Natural Language Understanding (NLU): Significant effort is being placed on improving Alexa’s ability to understand a wider range of language, accents, and conversational nuances. This is crucial for accurately interpreting shopping queries, including specific product attributes, comparisons, and subjective preferences.

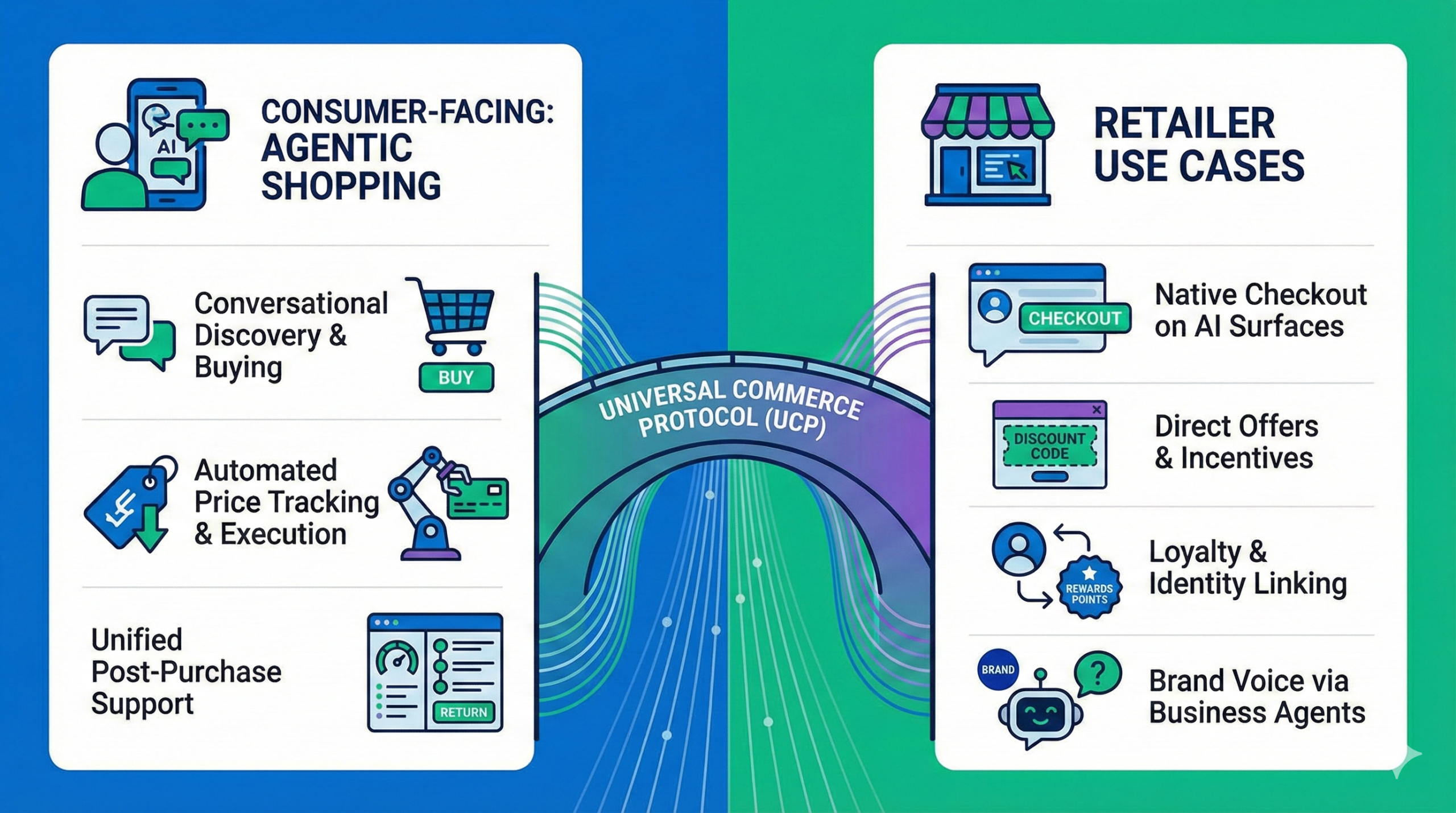

- Developing “Agentic” AI Capabilities: Recent announcements and investments, including expansions of the Alexa Fund into areas like “specialised AI agents,” suggest Amazon is building AI that can perform more complex tasks autonomously or with minimal guidance. For shopping, this could mean agents capable of researching products across categories, making informed recommendations based on user history and stated needs, and even managing entire purchase workflows. Amazon’s ‘Rufus’ conversational shopping assistant and ‘Buy for Me’ features are early examples of this direction within their existing apps, likely paving the way for deeper Echo integration.

- Improving the Shopping Experience Interface: While Alexa remains voice-first, Amazon is also exploring multi-modal experiences on screen-equipped devices like the Echo Show. This acknowledges that, for shopping, a purely auditory interaction can be limiting. Showing visual information alongside voice responses lets users see product details, images, and reviews, combining the convenience of voice input with the richness of a visual interface.

- Deeper Personalisation: Leveraging their vast data on customer purchase history and preferences, Amazon is working to make Alexa’s shopping recommendations highly personalised and predictive. Advanced AI can analyse past behaviour and real-time cues to suggest relevant products proactively or respond more effectively to open-ended requests like “What should I make for dinner tonight?” followed by suggestions for ingredients available on Amazon Fresh.

While OpenAI’s advancements have certainly raised the bar for conversational AI, Amazon possesses unique advantages: its massive e-commerce infrastructure, established customer base, extensive product catalogue, and a ubiquitous presence in smart homes through the Echo. By aggressively integrating advanced AI into Alexa, Amazon is clearly working to transform the Echo from a simple voice command device into a sophisticated, conversational shopping companion. The dream of effortless voice shopping isn’t dead; it’s being reimagined and rebuilt with the power of next-generation artificial intelligence. The race to dominate the future of conversational commerce is far from over.

It’s going to be fascinating to watch. The question is: are Amazon going to be too slow, or have they got something big cooking?